Skydio Autonomy

Make Skydio Autonomy your advantage.

For the past decade, we’ve built the skills of an expert pilot into our drones, so you can fly farther, smarter, and with confidence, day or night. Powered by the world’s most advanced AI and sensors, Skydio Autonomy sees and solves complex navigation challenges in real time. Intuitive, perceptive, and ready for action, so you can focus on what you do best.

How Skydio Autonomy works for you.

We do the flying. You focus on the task at hand.

Powered by advanced onboard computing, Skydio Autonomy sees, understands, and reacts in real time—day or night. With expert pilot instincts built in, our drones navigate the most complex environments, automatically avoiding obstacles as small as a ½-inch wire, so anyone can fly with confidence.

Eliminate blind spots.

With advanced navigation cameras, Skydio drones avoid obstacles a pilot may not see, even in the most complex environments.

Make the right decision, every time.

Trained on nearly a decade of flight data, Skydio’s predictive AI makes the right decision in real time, mission after mission.

We fly the smartest route. You get time to prepare.

Skydio Pathfinder autonomously plans and executes the best flight path, factoring in terrain, buildings, geofences, flight policies, and airspace regulations. In DFR Command or Remote Ops, simply select your destination, and Pathfinder charts the most effective route, adjusting to terrain elevation to maintain a constant altitude above ground level (AGL). Operators spend less time managing the flight and more time preparing for the mission.

We autonomously fly in the dark. You extend operations 24/7.

A first-of-its-kind nighttime navigation system lets you fly in darkness or low-light environments, with computer vision interpreting flight paths and avoiding obstacles to extend autonomous capabilities 24/7.

Bring the power of autonomous flight to tight indoor inspections, poorly lit infrastructure, and nighttime operations, with capabilities that other drones can only dream of.

See in the dark.

Skydio X10 and R10 are the only drones that fly fully autonomously at night.

Active illumination.

Mission-ready lighting options deliver active infrared or visible illumination—tailored to the demands of every mission.

We track what matters. You make the right move.

Shadow autonomously keeps a consistent visual lock on a subject—people or vehicles—more reliably than manual piloting, so operators can focus on tactical decisions.

Maintain visual contact.

Shadow isn’t limited by daylight. It seamlessly transitions between color and thermal sensors to track subjects at night or in low-visibility environments.

Subject following that thinks ahead.

Shadow continuously predicts subject movement by analyzing speed, direction, and visual appearance, enabling it to resume tracking even when the subject is briefly hidden behind obstacles.

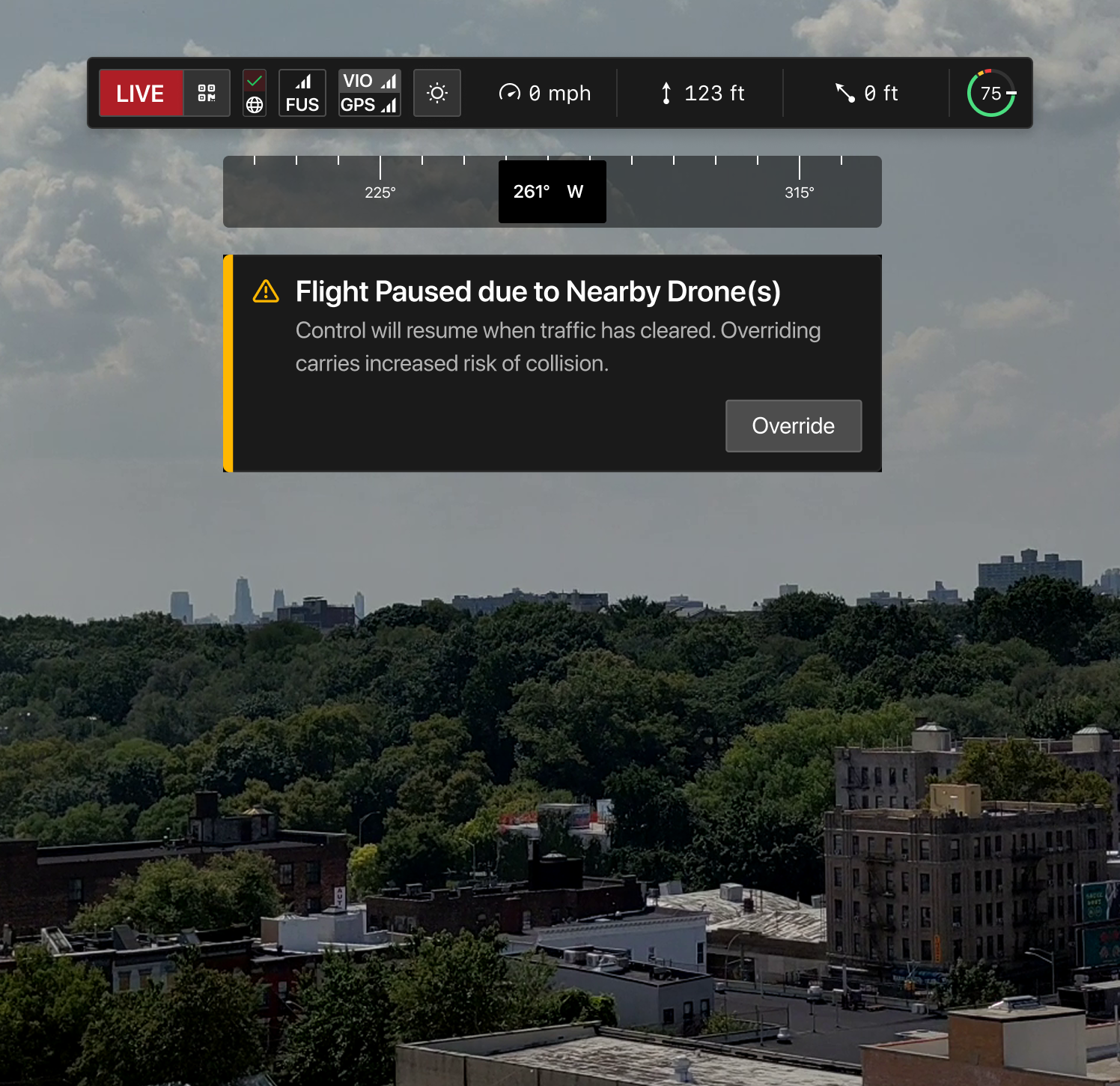

We keep drones clear of each other. You scale operations with confidence.

Skydio's multi-drone deconfliction system lets multiple X10s fly the same airspace safely and efficiently. Conflicts resolve automatically without pilot intervention, with real-time notifications keeping pilots informed of whether their drone is yielding or proceeding. This ensures teams can run simultaneous missions with confidence—while retaining full control.

Automatic collision avoidance.

Drones detect each other and maintain safe separation, preventing mid-air conflicts during multi-drone operations.

Prioritized flight control.

An intelligent priority system ensures the most mission-critical flights continue while others yield—keeping the right drones in the air at the right time.

We automate data capture. You get insights faster.

An advanced Spatial AI Engine gives Skydio drones complete awareness of their surroundings.

So you can repeat flights with centimeter-level consistency, conduct targeted inspections automatically, and build 2D and 3D models on the vehicle, in the field, in minutes. Complex data capture doesn't get more groundbreaking (or easier) than this.

3D Scan, made easy.

Generate digital twins, ready for export to photogrammetry applications.

See models instantly.

Evaluate models in the field to take immediate action and avoid costly repeat missions.

We get there and get you home. All you do is press a button.

Navigate complex sites even where no GPS is available, such as a parking garage. Groundbreaking algorithms help the drone reason in 3D space, building an understanding of the environment in mid-air.

Because X10 constantly maps its environment as it flies through it, when the mission is complete, it knows the safest way back home.

Trustworthy autonomy is not an add-on. It's an architecture.